Operating System Support for Extensible Secure File Systems

A RESEARCH PROFICIENCY EXAM PRESENTED

BY

CHARLES P. WRIGHT

STONY BROOK UNIVERSITY

Technical Report FSL-04-02

May 2004

Abstract of the RPE

Operating System Support for Extensible Secure File Systems

by

Charles P. Wright

Stony Brook University

2004

Securing data is more important than ever, yet secure file systems

still have not received wide use. There are five primary security

considerations: confidentiality, integrity, availability,

authenticity, and non-repudiation. In this paper we analyze file

systems that improve confidentiality and availability. We chose to

focus on these two aspects, because theft of information and

denial-of-service are the two largest costs associated with attacks.

Cryptographic file systems improve the confidentiality of files,

because cleartext information is never stored on disk. One barrier to

the adoption of cryptographic file systems is that the performance

impact is assumed to be too high, but in fact is largely unknown. In

this paper we first survey available cryptographic file systems, and

perform a performance comparison of a representative set of the

systems, emphasizing multiprogrammed workloads. We show the overhead of

cryptographic file systems can be minimal for many real-world

workloads.

We have observed not only general trends with each of the

cryptographic file systems we compared but also anomalies based on

complex interactions with the operating system, disks, CPUs, and ciphers.

Snapshotting and versioning systems increase availability, because

before data is modified or deleted, a copy is made. This prevents an

attacker from destroying valuable data. Indeed, snapshotting and

versioning are useful beyond security applications. Users or

administrators often make mistakes, which result in the destruction of

data; snapshotting or versioning allow simple recovery from such

mistakes. A useful extension of snapshotting is sandboxing: allowing

suspect processes to modify a separate copy of the data, while the

original data remains intact.

We then discuss operating system changes we made to support our

encryption and snapshotting file systems. Finally, we conclude by

suggesting future OS enhancements and potential improvements to

existing cryptographic file systems.

To my family - old and new.

Contents

1 Introduction

2 Cryptographic File System Survey

2.1 Block-Based Systems

2.1.1 Cryptoloop

2.1.2 CGD

2.1.3 GBDE

2.1.4 SFS

2.1.5 BestCrypt

2.1.6 vncrypt

2.1.7 OpenBSD vnd

2.2 Disk-Based File Systems

2.2.1 EFS

2.2.2 StegFS

2.3 Network Loopback Based Systems

2.3.1 CFS

2.3.2 TCFS

2.4 Stackable File Systems

2.4.1 Cryptfs

2.4.2 NCryptfs

2.4.3 ZIA

2.5 Applications

2.6 Ciphers

2.6.1 DES

2.6.2 Blowfish

2.6.3 AES (Rijndael)

2.6.4 Tweakable Ciphers

3 Encryption File System Performance Comparison

3.1 Experimental Setup

3.1.1 System Setup

3.1.2 Testbed

3.2 PostMark

3.2.1 Workstation Results

3.2.2 File Server Results

3.3 PGMeter

3.4 AIM Suite VII

3.5 Other Benchmarks

4 Snapshotting and Versioning Systems Survey

4.1 Block-Based Systems

4.1.1 FreeBSD Soft Updates

4.1.2 Clotho

4.1.3 Venti

4.2 Disk-Based File Systems

4.2.1 Write Anywhere File Layout

4.2.2 Ext3cow

4.2.3 Elephant File System

4.2.4 Comprehensive Versioning File System

4.3 Stackable File Systems

4.3.1 Unionfs Snapshotting and Sandboxing

4.3.2 Versionfs

4.4 User-level Libraries

4.4.1 Cedar File System

4.4.2 3D File System

4.5 Applications

5 Operating System Changes

5.1 Split-View Caches

5.2 Cache Cleaning

5.3 File Revalidation

5.4 On-Exit Callbacks

5.5 User-Space Callbacks

5.6 Bypassing VFS Permissions

6 Conclusions and Future Work

6.1 Snapshotting and Versioning Systems

6.2 Cryptographic File Systems

6.3 Future Work

List of Figures

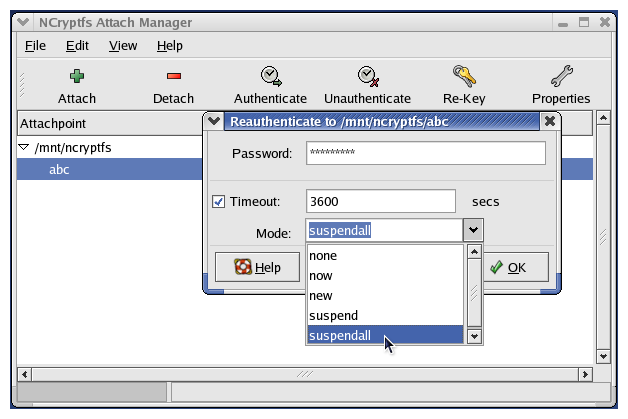

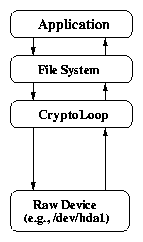

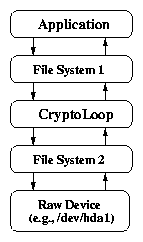

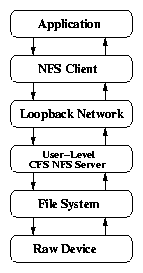

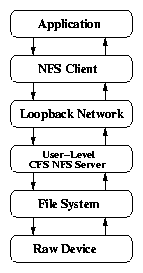

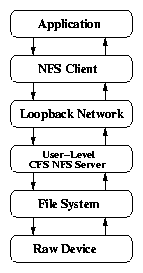

2.1 Call paths of storage encryption systems

(a) Cryptoloop (raw device)

(b) Cryptoloop (file)

(c) CFS

(d) TCFS

(e) Stackable file systems

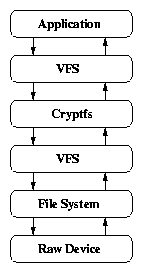

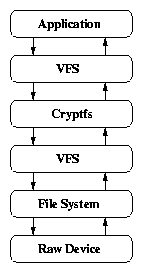

2.2 AES-EME mode

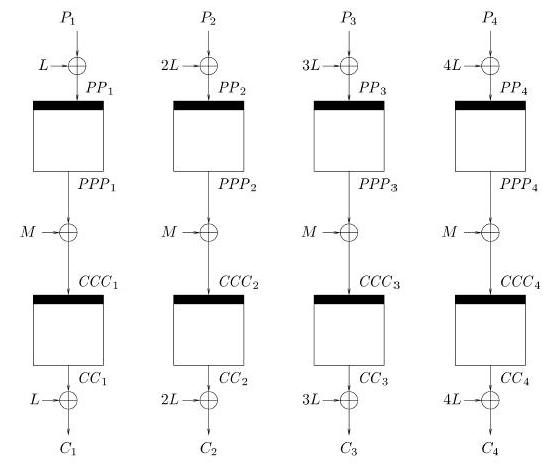

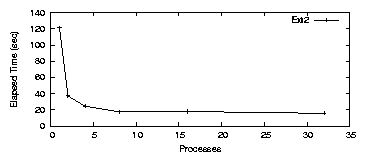

3.1 PostMark: Ext2 elapsed time for the workstation

3.2 PostMark: LOOPDEV for the workstation

(a) Elapsed time

(b) System time

3.3 PostMark: LOOPDD elapsed time for the workstation

3.4 PostMark: LOOPDEV elapsed time for the workstation, with nfract_sync=90%

3.5 PostMark: CFS elapsed time for the workstation

3.6 PostMark: NTFS on the workstation

(a) Elapsed time

(b) System time

3.7 PostMark: NCryptfs Workstation elapsed time

3.8 PostMark: File server elapsed time

(a) One CPU

(b) Two CPUs

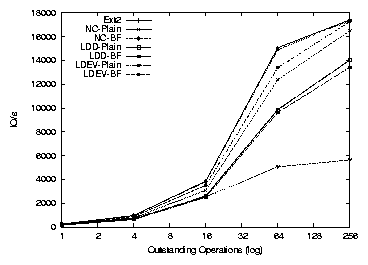

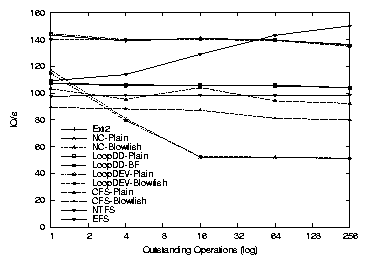

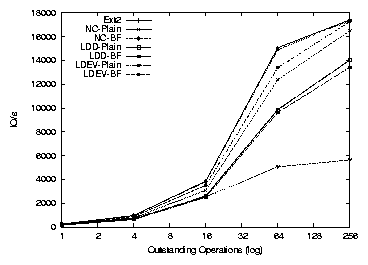

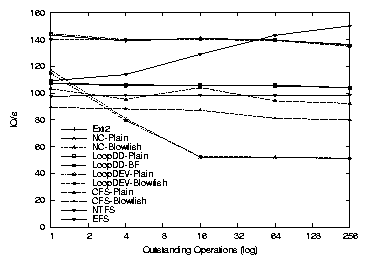

3.9 PGMeter: I/O operations per second

(a) Workstation

(b) File server

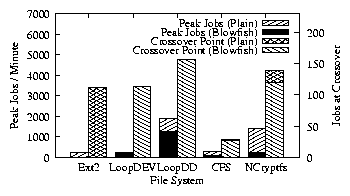

3.10 AIM7 results for the workstation

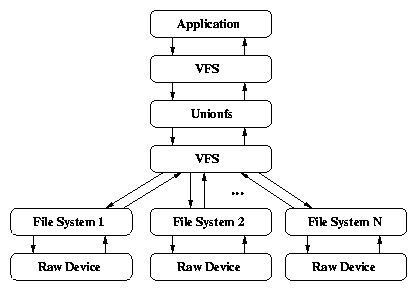

4.1 Call paths of fanout file systems

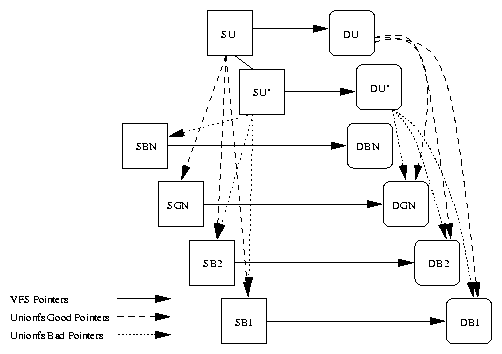

5.1 VFS structures for a sandboxed Unionfs

5.2 The NC-Tools re-key dialog

List of Tables

3.1 Peformance related features

3.2 Workstation raw encryption speeds for AES, Blowfish and 3DES

\LARGE{{Acknowledgments}}

Acknowledgments

The largest thanks go to Mariya who has endured the countless days,

nights, and weekends in the lab that went into this work. My advisor,

Erez Zadok, has always been available, helpful, and without him I

would not have been able to complete this research. I performed the

benchmarking of cryptographic file systems with Jay Dave. Puja Gupta,

Harikesavan Krishan, and Mohammad Nayyer Zubair wrote much of the

original Unionfs code that became the basis for snapshotting and

sandboxing.

My other lab mates have also been great to work with. I would be

remiss if I did not acknowledge Kiran Reddy who sat next to me

debugging race conditions for months on end.

My committee members, R. Sekar and Tzi-cker Chiueh, were generous of

their time and provided helpful input and comments on the work.

The anonymous SISW and USENIX reviewers provided very useful comments

that improved the quality of this work.

Jim Hughes, Serge Plotkin, and others at the IEEE SISW workshop also

provided valuable comments.

Anton Altaparmakov and Szakacsits Szabolcs helped us to understand the

behavior of NTFS.

This work was partially made possible thanks to NSF CAREER award

EIA-0133589, NSF award CCR-0310493, and HP/Intel gifts numbers

87128 and 88415.1.

Securing data is more important than ever. As the Internet has become

more pervasive, attacks have grown. Widely-available studies report

millions of dollars of lost revenues due to security breaches

[45,51]. Moreover, the theft of proprietary

information consistently caused the greatest financial loss.

Data loss, either malicious or accidental, also costs millions of

dollars [30,15]. Backup companies

report that on average, 20MB of data takes 30 hours to create and is

worth almost $90,000 [3].

In this report we survey solutions to these two important problems in

storage security. To protect confidentiality we investigate

encryption file systems; and to protect data availability we

investigate versioning and snapshotting systems. We then discuss

operating system improvements that we have made to support our

encryption and snapshotting file systems.

We believe there are three primary factors when evaluating encrypting

file systems: security, performance, and ease-of-use. These concerns

often compete; for example, if a system is too difficult to use, then

users simply circumvent it entirely. Furthermore, if users perceive

encryption to slow down their work, they just turn it off. Though

performance is an important concern when evaluating cryptographic file

systems, no rigorous real-world comparison of their performance has

been done to date.

Riedel described a taxonomy for evaluating the performance and

security that each type of system could theoretically provide

[52]. His evaluation encompassed a broad array of

choices, but because it was not practical to benchmark so many

systems, he only drew theoretical conclusions. In practice, however,

deployed systems interact with disks, caches, and a variety of other

complex system components - all having a dramatic effect on

performance.

We expect cryptographic file systems to become a commodity component

of future operating systems. In this paper we perform a real world

performance comparison between several systems that are used to secure

file systems on laptops, workstations, and moderately-sized file

servers. We also emphasize multi-programming workloads, which are not

often investigated. Multi-programmed workloads are becoming more

important even for single user machines, in which Windowing systems

are often used to run multiple applications concurrently.

We present results from a variety of benchmarks, analyzing the

behavior of file systems for metadata operations, raw I/O operations,

and combined with CPU intensive tasks.

We also use a variety of hardware to ensure that our results are valid

on a range of systems: Pentium vs. Itanium, single CPU vs. SMP, and

IDE vs. SCSI.

We observed general trends with each of the cryptographic file systems

we compared. We also investigated anomalies due to complex,

counterintuitive interactions with the operating system, disks, CPU,

and ciphers. We propose future directions and enhancements to make

systems more reliable and predictable.

We also believe that snapshotting and versioning systems are likely to

become common OS components. We define snapshotting as the act of

creating a consistent view of an entire file system, and versioning as

making backup copies of individual files. Snapshotting systems are

useful for system administrators and multi-user systems, they

essentially provide on-line and instant backups that can protect

against software errors and user actions.

If an attacker modifies data, the system administrator can quickly

revert to the latest snapshot, or easily find the changes made since

the last snapshot.

Versioning, on the other hand, is useful to end users, because they are

able to examine changes to their own files in detail.

Versioning systems are also typically more fine-grained than a

snapshotting system. Versions can be created on each write to a file,

but having so many versions of a file system would have a large

performance impact and be difficult for the system administrator to

manage.

Snapshotting and versioning have uses beyond security.

For example, system administrators need to know what changes are made

to the system while installing new software. If the installation

failed, the software does not work as advertised, or is not needed,

then the administrator often wants to revert to a previous good system

state. In this case a snapshotting system is ideal, because the

entire file system state can easily be reverted to the preinstall

snapshot.

Simple mistakes such as the accidental modification of files could be

ameliorated if users could simply execute a single command to

undo such accidental changes. In this case, a versioning file system

would allow the user to recover this individual file, without

affecting other files (or other users' data).

The rest of this paper is organized as follows.

Chapter 2 surveys cryptographic file systems and

ciphers.

Chapter 3 compares the performance of a cross section of

cryptographic file systems.

Chapter 4 surveys snapshotting and versioning

file systems.

Chapter 5 describes operating system changes we have

made in support of our new file systems.

We conclude in Chapter 6 and present possible future

directions.

Chapter 2

Cryptographic File System Survey

This section describes a range of available techniques of encrypting

data and is organized by increasing levels of abstraction: block-based

systems, native disk file systems, network-based file systems,

stackable file systems, and encryption applications. We conclude this

section by discussing ciphers that are suitable for encrypted storage.

2.1 Block-Based Systems

Block-based encryption systems operate below the file system level,

encrypting one disk block at a time. This is advantageous because

they do not require knowledge of the file system that resides on top

of them, and can even be used for swap partitions or applications that

require access to raw partitions (e.g., database servers). Also, they

do not reveal information about individual files (e.g., sizes) or

directory structure.

2.1.1 Cryptoloop

The Linux loopback device driver presents a file as a block device,

optionally transforming the data before it is written and after it is

read from the native file, usually to provide encryption. Linux

kernels include a cryptographic framework, CryptoAPI [53]

that exports a uniform interface for all ciphers and hashes.

Presently, IPsec and the Cryptoloop driver use these facilities.

We investigated three backing stores for the loopback driver: (1) a

preallocated file created using dd filled with random data,

(2) a raw device (e.g., /dev/hda2), and (3) a sparse backing

file created using truncate(2).

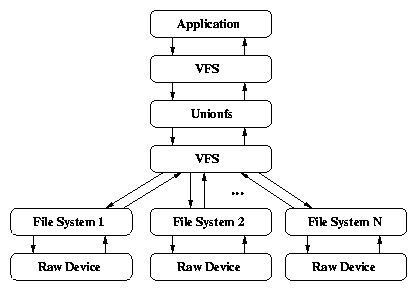

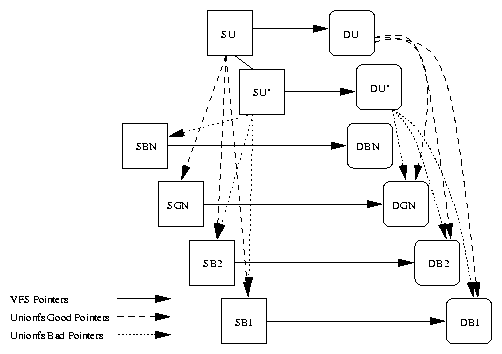

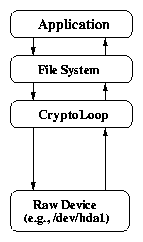

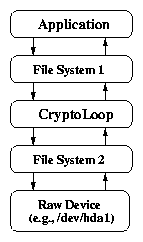

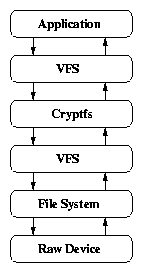

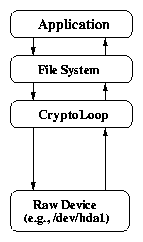

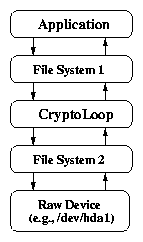

Figure 2.1(a) shows the path that data takes from

an application to the file system, when using a raw device as a

backing store. Figure 2.1(b) shows the path when

a file is used as the backing store. The major difference between the

two systems is that there is an additional file system between the

application and the disk when using a file instead of a device. Using

files rather than devices adds performance penalties including cutting

the effective buffer cache in half because blocks are stored in memory

both as encrypted and unencrypted data.

Each of these methods has advantages and disadvantages related to

security and ease-of-use as well. Using preallocated files is more

secure than using sparse files, because an attacker can not

distinguish random data in the file from encrypted data. However, to

use a preallocated file, space must be set aside for encrypted files

ahead of time.

Using a sparse backing store means that space does not need to be

preallocated for encrypted data, but reveals more about the structure

of the file system (since the attacker knows where and how large the

holes are).

Using a raw device allows an entire disk or partition to be encrypted,

but requires repartitioning, which is more complex than simply

creating a file. Typically there are also a limited number of

partitions available, so on multi-user systems encrypted raw devices

are not as scalable as using files as the backing store.

In Linux 2.6.4, the Cryptoloop driver has been deprecated by dm-crypt.

dm-crypt uses the generic device mapping API provided by 2.6 that is

also used by the LVM subsystem. dm-crypt is similar to Cryptoloop,

and the architectural overview remains the same. dm-crypt still uses

the CryptoAPI, and the on-disk encryption format has remained the

same. There are two key implementation advantages of dm-crypt over

Cryptoloop: (1) encryption is separated from the loopback

functionality, and (2) dm-crypt uses memory pools to prevent memory

allocation from within the block device driver (eliminating deadlocks

under memory pressure).

[Cryptoloop (raw device)]

[Cryptoloop (file)]

[Cryptoloop (file)]

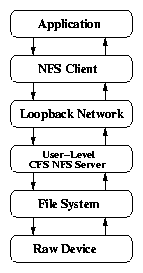

[CFS]

[CFS]

[TCFS]

[TCFS]

[Stackable file systems]

[Stackable file systems]

Figure 2.1: Call paths of storage encryption systems.

The CryptoGraphic Disk driver, available in NetBSD, is similar to the

Linux loopback device, and other loop-device encryption systems, but

it uses a native disk or partition as the backing store

[13]. CGD has a fully featured suite of user-space

configuration utilities that include n-factor authentication and

PKCS#5 for transforming user passwords into encryption keys

[54]. This system is similar to Cryptoloop using a raw

device as a backing store.

GEOM-based disk encryption (GBDE) is based on GEOM, which provides a

modular framework to perform transformations ranging from simple

geometric displacement for disk partitioning, to RAID algorithms, to

cryptographic protection of the stored data. GBDE is a GEOM transform

that enables encrypting an entire disk [28].

GBDE hashes a user-supplied passphrase into 512 bits of key material.

GBDE uses the key material to locate and encrypt a 2048 bit master key

and other metadata on four distinct lock sectors.

When an individual sector is encrypted, the sector number and bits of

the master key are hashed together using MD5 to create a kkey.

A randomly generated key, the sector key, is encrypted with the

kkey, and then written to disk. Finally, the sector's payload

is encrypted with the sector key and written to disk.

This technique, though more complex, is similar to Cryptoloop using a

raw device as a backing store.

SFS is an MSDOS device driver that encrypts an entire

partition [21]. SFS is similar to Cryptoloop using a

raw device as a backing store. Once encrypted, the driver presents a

decrypted view of the encrypted data. This provides the convenient

abstraction of a file system, but relying on MSDOS is risky because

MSDOS provides none of the protections of a modern operating system.

BestCrypt is a commercially available loopback

device driver supporting many ciphers [27]. BestCrypt

supports both Linux and Windows, and uses a normal file as a backing

store (similar to using a preallocated file with Cryptoloop).

vncrypt is the cryptographic disk driver for FreeBSD, based on the

vn(4) driver that provides a disk-like interface to a file. vncrypt

uses a normal file as a backing store (similarly to using a

preallocated file with Cryptoloop) and provides a character device

interface, which FreeBSD uses for file systems and swap devices.

vncrypt is similar to using Cryptoloop with a file as a backing store.

Figure 2.1: Call paths of storage encryption systems.

The CryptoGraphic Disk driver, available in NetBSD, is similar to the

Linux loopback device, and other loop-device encryption systems, but

it uses a native disk or partition as the backing store

[13]. CGD has a fully featured suite of user-space

configuration utilities that include n-factor authentication and

PKCS#5 for transforming user passwords into encryption keys

[54]. This system is similar to Cryptoloop using a raw

device as a backing store.

GEOM-based disk encryption (GBDE) is based on GEOM, which provides a

modular framework to perform transformations ranging from simple

geometric displacement for disk partitioning, to RAID algorithms, to

cryptographic protection of the stored data. GBDE is a GEOM transform

that enables encrypting an entire disk [28].

GBDE hashes a user-supplied passphrase into 512 bits of key material.

GBDE uses the key material to locate and encrypt a 2048 bit master key

and other metadata on four distinct lock sectors.

When an individual sector is encrypted, the sector number and bits of

the master key are hashed together using MD5 to create a kkey.

A randomly generated key, the sector key, is encrypted with the

kkey, and then written to disk. Finally, the sector's payload

is encrypted with the sector key and written to disk.

This technique, though more complex, is similar to Cryptoloop using a

raw device as a backing store.

SFS is an MSDOS device driver that encrypts an entire

partition [21]. SFS is similar to Cryptoloop using a

raw device as a backing store. Once encrypted, the driver presents a

decrypted view of the encrypted data. This provides the convenient

abstraction of a file system, but relying on MSDOS is risky because

MSDOS provides none of the protections of a modern operating system.

BestCrypt is a commercially available loopback

device driver supporting many ciphers [27]. BestCrypt

supports both Linux and Windows, and uses a normal file as a backing

store (similar to using a preallocated file with Cryptoloop).

vncrypt is the cryptographic disk driver for FreeBSD, based on the

vn(4) driver that provides a disk-like interface to a file. vncrypt

uses a normal file as a backing store (similarly to using a

preallocated file with Cryptoloop) and provides a character device

interface, which FreeBSD uses for file systems and swap devices.

vncrypt is similar to using Cryptoloop with a file as a backing store.

2.1.7 OpenBSD vnd

Encrypted file systems support is part of OpenBSD's Vnode Disk Driver,

vnd(4). vnd uses two modes: one bypasses the buffer

cache, the second uses the buffer cache. For encrypted devices, the

buffer cache must be used to ensure cache coherency on unmount. The

only encryption algorithm implemented so far is Blowfish.

vnd is similar to using Cryptoloop with a file as a backing

store.

2.2 Disk-Based File Systems

Disk-based file systems that encrypt data are located at a higher

level of abstraction than block-based systems. These file systems

have access to all per-file and per-directory data, so they can

perform more complex authorization and authentication than block-based

systems, yet at the same time disk-based file systems can control the

physical data layout. This means that disk-based file systems

can limit the amount of information revealed to an attacker about file

size and owner, though in practice these attributes are often still

revealed in order to preserve the file system's on-disk structure. Additionally, since there is no additional layer of

indirection, disk-based file systems can have performance benefits

over other techniques described in this section (including the

loop devices).

EFS is the Encryption File System found in Microsoft Windows, based on

the NT kernel (Windows 2000 and XP) [37]. It is an

extension to NTFS and utilizes Windows authentication methods as well

as Windows ACLs [39,52]. Though EFS is

located in the kernel, it is tightly coupled with user-space DLLs to

perform encryption and the user-space Local Security Authentication

Server for authentication [61]. This prevents EFS from

being used for protecting files or folders in the root or

\winnt directory. Encryption keys are stored on

the disk in a lockbox that is encrypted using the user's login

password. This means that when users change their password, the

lockbox must be re-encrypted. If an administrator changes the user's

password, then all encrypted files become unreadable. Additionally,

for compatibility with Windows 2000, EFS uses DESX

[57] by default and the only other available

cipher is 3DES (included in Windows XP or in the Windows 2000 High

Encryption pack).

StegFS is a file system that employs steganography as well as

encryption [35]. If adversaries inspect the

system, then they only know that there is hidden data, but not its

content or the extent of what is hidden. This is achieved via a

modified Ext2 kernel driver that keeps a separate block-allocation

table per

security level. It is not possible to determine how many

security levels exist without the key to each security level. When

the disk is mounted with an unmodified Ext2 driver, random blocks may

be overwritten, so data is replicated randomly throughout the disk to

avoid data loss. Although StegFS achieves plausible deniability of

data's existence, the performance degradation is a factor of 6-196,

making it impractical for most applications.

2.3 Network Loopback Based Systems

Network-based file systems (NBFSs) operate at a higher level of

abstraction than disk-based file systems, so NBFSs can not control the

on-disk layout of files. NBFSs have two major advantages: (1) they

can operate on top of any file system, and (2) they are more portable

than disk-based file systems. NBFS's major disadvantages are

performance and security. Since each request must travel through the

network stack, more data copies are required and performance suffers.

Security also suffers because NBFS are vulnerable to all of the

weaknesses of the underlying network protocol (usually NFS

[70,60]).

CFS is a cryptographic file system that is implemented as a user-level

NFS server [6]. The cipher and key are specified

when encrypted directories are first created. The CFS daemon is

responsible for providing the owner with access to the encrypted data

via an attach command. The daemon, after verifying the user ID

and key, creates a directory in the mount point directory that acts as

an unencrypted window to the user's encrypted data. Once attached,

the user accesses the attached directory like any other directory.

CFS is a carefully designed, portable file system with a wide choice

of built-in ciphers. Its main problem, however, is performance.

Since it runs in user mode, it must perform many context switches

and data copies between kernel and user space. As can be seen in

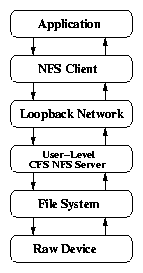

Figure 2.1(c), since CFS has an unmodified NFS client which

communicates with a modified NFS server, it must run only over the

loopback network interface, lo.

TCFS is a cryptographic file system that is implemented as a modified

kernel-mode NFS client. Since it is used in conjunction with an NFS

server, TCFS works transparently with the remote file system. To

encrypt data, a user sets an encrypted attribute on directories and

files within the NFS mount point [8,34]. TCFS

integrates with the UNIX authentication system in lieu of requiring

separate passphrases. It uses a database in /etc/tcfspwdb to

store encrypted user and group keys. Group access to encrypted

resources is limited to a subset of the members of a given UNIX group,

while allowing for a mechanism (called threshold secret

sharing) for reconstructing a group key when a member of a group is

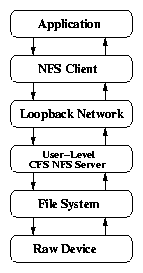

no longer available. As can be seen on the right of Figure

2.1(d), TCFS uses a modified NFS client, which must be

implemented in the kernel. This does, however, allow it to operate over

any network interface and to work with remote servers.

Since TCFS runs on individual clients, and the NFS server is

unmodified this increases scalability because cryptographic processing

takes place on the client instead of the server.

TCFS has several weaknesses that make it less than ideal for

deployment. First, the reliance on login passwords as user keys is not

safe. Also, storing encryption keys on disk in a key database further

reduces security. Finally, TCFS operates only on systems with

Linux kernel 2.2.17 or earlier.

2.4 Stackable File Systems

Stackable file systems are a compromise between kernel-level

disk-based file systems and loopback network file systems. Stackable

file systems can operate on top of any file system; do not copy data

across the user-kernel boundary or through the network stack; and they

are portable to several operating systems

[73].

Cryptfs is the stackable, cryptographic file system and part of the

FiST toolkit [72]. Cryptfs was never designed to be a

secure file system, but rather a proof-of-concept application of FiST

[73]. Cryptfs supports only one cipher and

implements a limited key management scheme. Cryptfs serves as the

basis for several commercial and research systems (e.g., ZIA

[11]). Figure 2.1(e) shows Cryptfs's

operation. A user application invokes a system call through the

Virtual File System (VFS). The VFS calls the stackable file system,

which again invokes the VFS after encrypting or decrypting the data.

The VFS calls the lower level file system, which writes the data to

its backing store.

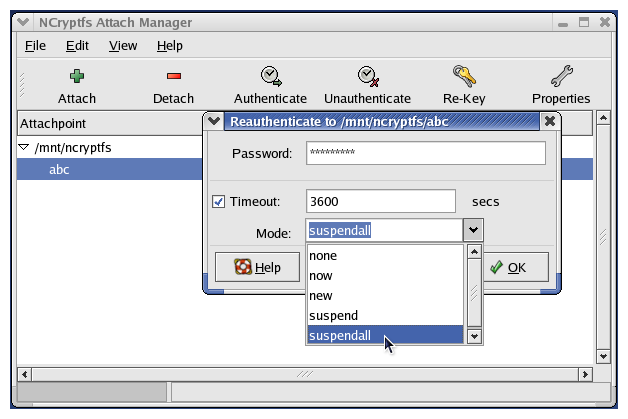

NCryptfs is our stackable cryptographic file system, designed with the

explicit aim of balancing security, convenience, and performance

[69]. NCryptfs allows system administrators and

users to customize NCryptfs according to their specific

needs. NCryptfs supports multiple concurrent authentication methods,

multiple dynamically-loadable ciphers, ad-hoc groups, and

challenge-response authentication. Keys, active sessions, and

authorizations in NCryptfs all have timeouts. NCryptfs can be

configured to transparently suspend and resume processes based on key,

session or authorization validity.

NCryptfs also enhanced the kernel to discard cleartext pages and

notify the file system on process exit in order to expunge invalid

authentication entries.

Zero-Interaction Authentication (ZIA) uses Cryptfs with Rijndael as a

basis for a system that uses physical locality to authorize use on a

laptop [11]. To effectively use file system encryption,

users must provide the system with a passphrase. Normally, after the

user provides the machine with the passphrase, anyone who physically

possess the machine has access to the data.

The laptop running ZIA communicates with an ancillary device that is

always in the physical possession of the user, (e.g., a wrist-watch)

that provides the laptop with the encryption key over a wireless

channel. When the device leaves the vicinity of the laptop,

all information in the file cache is encrypted. When the user

returns, the information is automatically decrypted.

2.5 Applications

File encryption can be performed by applications such as GPG

[31] or crypt(1) that reside above the file system. This

solution, however, is quite inconvenient for users. Each time they

want to access a file, users must manually decrypt or encrypt

it. The more user interaction is required to encrypt or decrypt

files, the more often mistakes are made, resulting in damage to the

file or leaking confidential data [67]. Additionally, the

file may reside in cleartext on disk while the user is actively working

on it.

File encryption can also be integrated into each application (e.g.,

mail clients), but this shifts the burden from users to applications

programmers. Often applications developers do not believe the extra

effort of implementing features is justified when only a small

fraction of users would take advantage of those features.

Even if encryption is deemed an important enough feature to be

integrated into most applications there are still two major problems

with this approach.

First, each additional application that the user must trust to

function correctly reduces the overall security of the system.

Second, since each application may implement encryption slightly

differently it would make using files in separate programs more

difficult.

2.6 Ciphers

For cryptographic file systems there are several ciphers that may be

used, but those of interest are generally symmetric block ciphers.

This is because block ciphers are efficient and versatile.

We discuss DES variants, Blowfish, and Rijndael because they are often

used for file encryption, and are believed to be secure.

There are many other block ciphers available, including CAST, GOST,

IDEA, MARS, Serpent, RC5, RC6, and TwoFish. Most of them have similar

characteristics with varying block and key sizes.

We also discuss tweakable ciphers, a new cipher mode which is

especially suited for encrypted storage.

DES is a block cipher designed by IBM with assistance from the NSA in

the 1970s [57]. DES was the first

encryption algorithm that was published as a standard by NIST with

enough details to be implemented in software. DES uses a 56-bit key,

a 64-bit block size, and can be implemented efficiently in hardware.

DES is no longer considered to be secure. There are several more

secure variants of DES, most commonly 3DES [40]. 3DES uses

three separate DES encryptions with three different keys, increasing

the total key length to 168 bits. 3DES is considered secure for

government communications. DESX is a variant designed by RSA Data

Security that uses a second 64-bit key for

whitening (obscuring) the data before the first round and after the

last round of DES proper, thereby reducing its vulnerability to

brute-force attacks, as well as differential and linear cryptanalysis

[57].

Blowfish is a block cipher designed by Bruce Schneier with a 64-bit

block size and key sizes up to 448 bits

[57]. Blowfish had four design criteria:

speed, compact memory usage, simple operations, and variable security.

Blowfish works best when the key does not change often, as is the case

with file encryption, because the key setup routines require 521

iterations of Blowfish encryption. Blowfish is widely used for file

encryption.

2.6.3 AES (Rijndael)

AES is the successor to DES, selected by a public competition. Though

all of the six finalists were judged to be sufficiently secure for

AES, the final choice for AES was Rijndael based on the composite of

the three selection criteria (security, cost, and algorithm

characteristics) [41].

Since AES has been blessed by the US government and government

purchases will require AES encryption, it is likely that AES will

become more popular.

Rijndael is a block cipher based on the Square cipher that uses

S-boxes (substitution), shifting, and XOR to encrypt 128-bit blocks of

data. Rijndael supports 128, 192, and 256 bit keys.

2.6.4 Tweakable Ciphers

Tweakable block ciphers are new cipher mode (other cipher modes

include electronic codebook (ECB), cipher block chaining (CBC), and cipher

feedback (CFB)) [22]. Tweakable block ciphers

operate on large block sizes (e.g., 512-bytes) and are ideal for

sector level-encryption. The IEEE Security in Storage Working Group

is planning on using the AES encrypt-mix-encrypt (EME) cipher mode for

their project 1619 "Standard Architecture for Encrypted Shared

Storage Media."

As can be seen in Figure 2.2, encrypt-mix-encrypt

essentially divides the sector into encryption-block-size units

(P1, P2, ..., Pn), encrypts each one, mixes it with the result

of all the first round encryptions, and then encrypts the result.

Specifically, each Pi is encrypted once with AES, and then all the

results are exclusive-ored to create M. Tweakable cipher modes do

not use an IV, but rather use a tweak T (T is not directly shown

in the figure, but is a component of M). M is then calculated as

M XOR T. The result from the previous encryptions are

exclusive-ored with M, and then encrypted with AES again. Each

block is additionally exclusive-ored with iL (a constant derived

from EK(0)), before the first encryption round and after the last

encryption round. In sum, Ci = iL XOR EK(EK(iL XOR Pi) XOR M), where M = EK(L XOR P1) XOR ... EK(nL XOR Pn) XOR T.

Unlike CBC, tweakable ciphers have very large error propagation.

Since each output block includes the tweak (presumably based on the

sector) and random information from the first encryption round, small

modifications are propagated throughout the sector.

If there are any modifications to the sector, then they will randomly

affect each bit of the sector. The large error propagation has two

advantages: (1) even a small change to data is more likely to be

noticed, and (2) it is not susceptible to CBC cut-and-paste attacks.

EME ciphers are also more suited to hardware acceleration than

standard CBC mode. In CBC mode to encrypt the i-th data block, the

result from the i-1-th block is required, so to encrypt n blocks,

there is a delay of n units. However, in EME mode only one

synchronization point is needed (to compute M) and only two

encryption delays are required (one for the first round and one for

the second round).

Figure 2.2: L = EK(O) and M = (PPP1 XOR ... PPPn) XOR T, where T is the tweak. Figure taken from Halevi and Rogaway [22].

Figure 2.2: L = EK(O) and M = (PPP1 XOR ... PPPn) XOR T, where T is the tweak. Figure taken from Halevi and Rogaway [22].

Chapter 3

Encryption File System Performance Comparison

To compare the performance of cryptographic systems we chose one

system from each category in Section 2, because we

were interested in evaluating properties of techniques, not specific

systems. We chose benchmarks that measure file system operations, raw

I/O operations, and a simulated user workload. Throughout the

comparisons we ran multi-programmed tests to compare increasingly

parallel workloads and scalability.

We did not benchmark an encryption application, because other programs

can not transparently access encrypted data.

As representatives from each category we chose Cryptoloop, EFS, CFS,

and NCryptfs.

We chose Cryptoloop, EFS, and CFS because they are widely used, and

up-to-date. Cryptoloop can be run on top of raw devices and normal

files, which makes it comparable to a wider range of block-based

systems than a block-based system that uses only files or only block

devices. We chose NCryptfs over Cryptfs because NCryptfs is more

secure.

We also tried to choose systems that run on a single operating system,

so that operating system effects would be consistent. We used Linux

for most systems, but for disk-based file systems we chose Windows.

There was no suitable and widely-used disk-based solution for Linux,

large part because block-based systems are generally used on Linux.

From previous studies, we also knew that TCFS and BestCrypt had

performance problems and we therefore omitted them

[69].

We chose workloads that stressed file system and I/O operations,

particularly when there are multiple users. We did not use a compile

benchmark or other similar workloads, because they do not effectively

measure file system performance under heavy loads [65].

In Section 3.1 we describe our experimental setup.

In Section 3.2 we report on PostMark, a benchmark that

exercises file system meta-data operations such as lookup, create,

delete, and append.

In Section 3.3 we report on PGMeter, a benchmark that

exercises raw I/O throughput.

In Section 3.4 we report on AIM Suite VII, a benchmark

that simulates large multiuser workloads.

Finally, in Section 3.5 we report other interesting

results.

3.1 Experimental Setup

To provide a basis for comparison we also perform benchmarks on Ext2

and NTFS. Ext2 is the baseline for Cryptoloop, CFS, and NCryptfs.

NTFS is the baseline for EFS.

We chose Ext2 rather than other Linux file systems because it is

widely used and well-tested. We chose Ext2 rather than the Ext3

journaling file system, because the loopback device's interaction with

Ext3 has the effect of disabling journaling. For performance reasons,

the default journaling mode of Ext3 does not journal data, but rather

only journals meta-data. When a loopback device is used with a

backing store in an Ext3 file, the Ext3 file system contained inside

the file does journal meta-data, but it writes the journal to the

lower-level Ext3 file as data, which is not journaled.

Additionally, journaling file systems create more complicated I/O

effects by writing to the journal as well as normal file system data.

We used the following configurations:

- A vanilla Ext2 file system.

- A loopback device using a raw partition as a backing store.

Ext2 is used inside the loop device. We refer to this

configuration as LOOPDEV.

- A loopback device using a preallocated backing store. Both

the underlying file system and the file system contained

within the loop device are Ext2. We refer to this

configuration as LOOPDD.

- CFS using an Ext2 file system as a backing store.

- A vanilla NTFS file system.

- An encrypted folder within an NTFS file system (EFS).

- NCryptfs stacked on top of an Ext2 file system.

3.1.1 System Setup

For Cryptoloop and NCryptfs we used four ciphers: the Null (identity)

transformation to demonstrate the overhead of the technique without

cryptography; Blowfish with a 128-bit key to demonstrate

overhead with a cipher commonly used in file systems; AES

with a 128-bit key because AES is the successor to DES

[12]; and 3DES because it is an often-used cipher

and it is the only cipher that all of the tested systems supported.

We chose to use 128-bit keys for Blowfish and AES, because that is the

default key size for many systems. For CFS we used Null, Blowfish, and

3DES (we did not use AES because CFS only supports 64-bit ciphers).

For EFS we used only 3DES, since the only other available cipher is

DESX.

| Feature | LOOPDEV | LOOPDD | EFS | CFS | NCryptfs |

| 1 | Category | Block Based | Block Based | Disk FS | NFS | Stackable FS |

| 2 | Location | Kernel | Kernel | Hybrid | User Space | Kernel |

| 3 | Buffering | Single | Double | Single | Double | Double |

| 4 | Encryption unit | 512B | 512B | 512B | 8B | 4KB/16KB |

| 5 | Encryption mode | CBC | CBC | CBC (?) | OFB+ECB | CTS/CFB |

| 6 | Write Mode | Sync,Async | Async Only | Sync,Async | Async Only | Async only |

| | | | | | Write-Through |

Table 3.1: Peformance related features.

In Table 3.1 we summarize various system features that

affect performance.

1. Category LOOPDEV and LOOPDD

use a block-based approach. EFS is implemented as an

extension to the NTFS disk-based file system. CFS is

implemented as a user-space NFS server. NCryptfs is a

stackable file system.

2. Location LOOPDEV, LOOPDD, and NCryptfs

are implemented in the kernel. EFS uses a hybrid

approach, relying on user-space DLLs for some cryptographic

functions. CFS is implemented in user space.

3. Buffering LOOPDEV and EFS keep only one

copy of the data in memory. LOOPDD, CFS, and

NCryptfs have both encrypted and decrypted data in memory.

Double buffering effectively cuts the buffer cache size in half.

4. Encryption unit LOOPDEV, LOOPDD, and

EFS use a disk block as their unit of encryption. This

defaults to 512 bytes for our tested systems. CFS uses the

cipher block size as its encryption unit and only supports

ciphers with 64-bit blocks. NCryptfs uses the PAGE_SIZE as

the unit of encryption: on the i386 this is 4KB and on the

Itanium this defaults to 16KB.

5. Encryption mode LOOPDEV and LOOPDD use

CBC encryption. The public literature does not state what

mode of encryption EFS uses, though it is most probably CBC

mode because: (1) the Microsoft CryptoAPI uses CBC mode by

default, and (2) it has a fixed block size that is accommodating

to CBC. NCryptfs uses cipher text stealing (CTS) to encrypt data

of arbitrary length to data of the same length.

CFS does not use standard cipher modes, but rather a hybrid of

ECB and OFB.

Chaining modes (e.g., CBC) do not allow direct random access to

files, because to read or write from the middle of a file all

the previous data must be decrypted or encrypted first.

However, ECB mode permits a cryptanalyst to do structural

analysis.

CFS solves this by doing the following: when an attach is

created, half a megabyte of pseudo-random data is generated

using OFB mode and written to a mask file. Before data

is written to a data file, it is XORed with the contents of

the mask file at the same offset as the data (modulo the size

of the mask file), then encrypted with ECB mode. To read data

this process is simply reversed.

This method is used to

allow uniform access time to any portion of the file while

preventing structural analysis.

NCryptfs and Cryptoloop achieve the same effect using initialization

vectors (IVs) and CBC on pages and blocks rather than the whole file.

For Cryptoloop, CFS, and NCryptfs we adapted the Blowfish,

AES, and 3DES implementations from OpenSSL 0.9.7b to minimize

effects of different cipher implementations [46].

Cryptoloop uses CBC mode encryption for all operations.

NCryptfs uses CTS to ensure that an arbitrary length buffer

can be encrypted to a buffer of the same length

[57]. NCryptfs uses CTS because

size changing algorithms add complexity and overhead to

stackable file systems [71]. CTS mode

differs from CBC mode only for the last two blocks of data.

For buffers that are shorter than the block size of the

cipher, NCryptfs uses CFB, since CTS requires at least one

full block of data. We did not change the mode of encryption

that CFS uses.

6. Write Mode LOOPDD does not use a

synchronous file as its backing store, therefore all writes

become asynchronous. CFS uses NFSv2 where all writes

on the server must be synchronous, but CFS violates the

specification by opening all files asynchronously.

Due to VFS calling conventions, NCryptfs does not

cause the lower-level file system to use write synchronously.

Asynchronous writes improve performance, but at the expense of

reliability. NCryptfs uses a write-through strategy: whenever

a write system call is issued, NCryptfs passes it to

the lower-level file system. The lower-level file system may

not do any I/O, but NCryptfs still encrypts data.

We ran our benchmarks on two machines: the first represents a

workstation or a small work group file server, and the second represents

a mid-range file server.

The workstation machine is a 1.7Ghz Pentium IV with 512MB of RAM. In

this configuration all experiments took place on a 20GB 7200 RPM

Western Digital Caviar IDE disk. For Cryptoloop, CFS, and NCryptfs we

used Red Hat Linux 9 with a vanilla Linux 2.4.21 kernel. For EFS we

used Windows XP (Service pack 1) with high encryption enabled for EFS.

Our file server machine is a 2 CPU 900Mhz Itanium 2 McKinley

(hereafter we refer to this CPU as an Itanium) with 8GB of RAM running Red

Hat Linux 2.1 Advanced Server with a vanilla SMP Linux 2.4.21 kernel.

All experiments took place on a Seagate 73GB 10,000 RPM Cheetah

Ultra160 SCSI disk. Only Ext2, Cryptoloop, and NCryptfs were tested

on this configuration. We did not test CFS because its file handle

and encryption code are not 64-bit safe. We did not test NTFS or EFS

because 64-bit Windows is not yet commonly used on the Itanium

platform.

All tests were performed on a cold cache, achieved by unmounting and

remounting the file systems between iterations. The tests were

located on a dedicated partition in the outer sectors of the disk to

minimize ZCAV and other I/O effects [14].

For multi-process tests we report the elapsed time as the maximum

elapsed time of all processes, which shows how long it takes to

actually get the allotted work completed. We report the system time

as the sum of the system times of each process and kernel thread

involved in the test. For LOOPDD we add the CPU time of the

kernel thread used by the loopback device to perform encryption. For

LOOPDEV we add in the CPU time used by

kupdated, because encryption takes place when syncing dirty

buffers. Finally, for CFS we add the user and system time used by

cfsd, because this is CPU time used on behalf of the process.

As expected there were no significant variations in the user time of

the benchmark tools, because no changes took place in the user

code. We do not report user times for these tests because we do not

vary the amount of work done in the user process.

We ran all tests several times, and we report instances where our

computed standard deviations were more than 5% of the mean.

Throughout this paper, if two values are within 5%, we do not

consider that a significant difference.

3.2 PostMark

PostMark focuses on stressing the file system by performing a series

of file system operations such as directory lookups, creations,

appends, and deletions on small files. A large number of small files

is common in electronic mail and news servers where multiple users are

randomly modifying small files. We configured PostMark to create

20,000 files and perform 200,000 transactions in 200 subdirectories.

To simulate many concurrent users, we ran each configuration with 1,

2, 4, 8, 16, and 32 concurrent PostMark processes. The total number

of transactions, initial files, and subdirectories was divided evenly

among each process. We chose the above parameters for the number of

files and transactions as they are typically used and recommended for

file system benchmarks [29,66]. We

used many subdirectories for each process, so that the work could be

divided without causing the number of entries in each directory to

affect the results (Ext2 uses a linear directory structure). We ran

each test at least ten times.

Through this test we demonstrate the overhead of file system

operations for each system. First we discuss the results on our

workstation configuration in Section 3.2.1. Next, we

discuss the results on our file server configuration in Section

3.2.2.

3.2.1 Workstation Results

Ext2

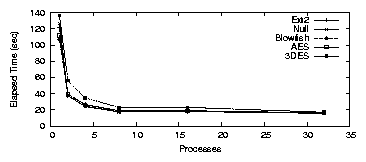

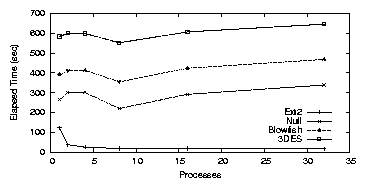

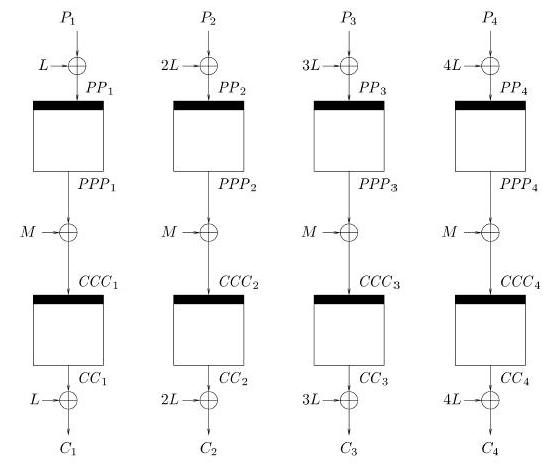

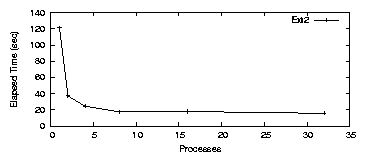

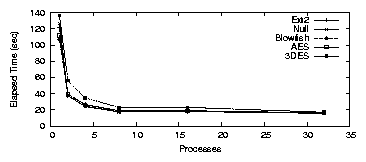

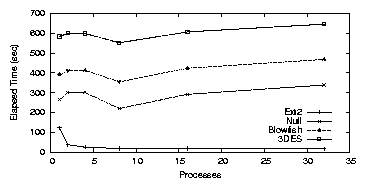

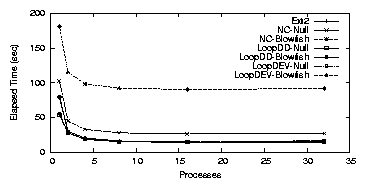

Figure 3.1 shows elapsed time results for Ext2.

For a single process, Ext2 ran for 121.5 seconds, used 12.2 seconds of

system time, and average CPU utilization was 32.1%. When a second

process was added, elapsed time dropped to 37.1 seconds, less than

half of the original time. The change in system time was negligible

at 12.5 seconds. System time remaining relatively constant is to be

expected because the total amount of work remains fixed. The average

CPU utilization was 72.1%. For four processes the elapsed time was

17.4 seconds, system time was 9.9 seconds, and CPU utilization was

79.4%. After this point the improvement levels off as the CPU and

disk became saturated with requests.

Figure 3.1: PostMark: Ext2 elapsed time for the workstation

LOOPDEV

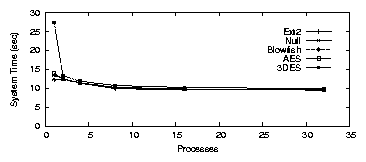

Figure 3.2(a) shows elapsed time results for

LOOPDEV, which follows the same general trend as Ext2. The

single process test is dominated by I/O and subsequent tests improve

substantially, until the CPU is saturated. A single process took

126.5 seconds for Null, 106.7 seconds for Blowfish, 111.8 seconds for

AES, and 136.3 seconds for 3DES.

The overhead over Ext2 is 4.2% for Null, -12.2% for Blowfish,

-8.0% for AES, and 12.25% for 3DES.

The fact that Blowfish and AES are faster than Ext2 is an artifact of

the disks we used. When we ran the experiment on

a ramdisk, a SCSI disk, or a slower IDE disk, the results were as

expected. Ext2 was fastest, followed by Null, Blowfish, AES, and

3DES.

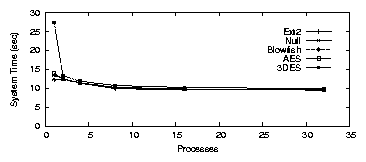

The system times, shown in Figure 3.2(b), were

12.4, 13.5, 14.0, and 27.4 seconds for Null, Blowfish, AES and 3DES,

respectively. The average CPU utilization was 31.6%, 38.3%, 36.6%,

40.0% for Null, Blowfish, AES and 3DES, respectively. When there

were eight concurrent processes, the elapsed times were 17.3, 18.4,

18.8, and 22.8 seconds, respectively. The overheads were

1.0-32.2% for eight processes. The average CPU utilization

ranged from 64.1-80.1%. The decline in system time was unexpected,

and is due to the decreased elapsed time. Since dirty buffers have

a shorter lifetime when elapsed time decreases, kupdated does

not flush the short-lived buffers, thereby reducing the amount of

system time spent during the test.

[Elapsed time]

Figure 3.1: PostMark: Ext2 elapsed time for the workstation

LOOPDEV

Figure 3.2(a) shows elapsed time results for

LOOPDEV, which follows the same general trend as Ext2. The

single process test is dominated by I/O and subsequent tests improve

substantially, until the CPU is saturated. A single process took

126.5 seconds for Null, 106.7 seconds for Blowfish, 111.8 seconds for

AES, and 136.3 seconds for 3DES.

The overhead over Ext2 is 4.2% for Null, -12.2% for Blowfish,

-8.0% for AES, and 12.25% for 3DES.

The fact that Blowfish and AES are faster than Ext2 is an artifact of

the disks we used. When we ran the experiment on

a ramdisk, a SCSI disk, or a slower IDE disk, the results were as

expected. Ext2 was fastest, followed by Null, Blowfish, AES, and

3DES.

The system times, shown in Figure 3.2(b), were

12.4, 13.5, 14.0, and 27.4 seconds for Null, Blowfish, AES and 3DES,

respectively. The average CPU utilization was 31.6%, 38.3%, 36.6%,

40.0% for Null, Blowfish, AES and 3DES, respectively. When there

were eight concurrent processes, the elapsed times were 17.3, 18.4,

18.8, and 22.8 seconds, respectively. The overheads were

1.0-32.2% for eight processes. The average CPU utilization

ranged from 64.1-80.1%. The decline in system time was unexpected,

and is due to the decreased elapsed time. Since dirty buffers have

a shorter lifetime when elapsed time decreases, kupdated does

not flush the short-lived buffers, thereby reducing the amount of

system time spent during the test.

[Elapsed time]

[System time]

[System time]

Figure 3.2: PostMark: LOOPDEV for the workstation

The results fit the pattern established by Ext2 in that once the CPU

becomes saturated, the elapsed time remains constant. Again, the

largest benefit is seen when going from one to two processes.

Encryption does not have a large user visible impact, even for this

intense workload.

LOOPDD

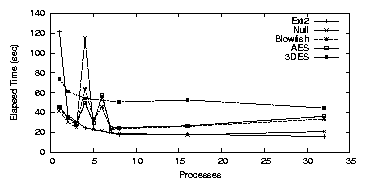

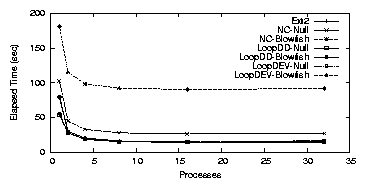

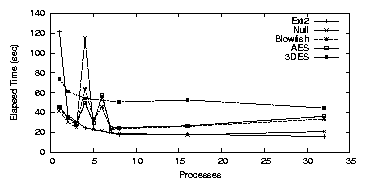

Figure 3.3 shows the elapsed time results for the

LOOPDD configuration. The elapsed times for a single process

are 41.4 seconds for Null, 44.5 for Blowfish, 45.7 for AES, and 73.9

for 3DES. For eight processes, the elapsed times decrease to 23.1,

23.5, 24.5, and 50.39 seconds for Null, Blowfish, AES, and 3DES,

respectively.

Figure 3.2: PostMark: LOOPDEV for the workstation

The results fit the pattern established by Ext2 in that once the CPU

becomes saturated, the elapsed time remains constant. Again, the

largest benefit is seen when going from one to two processes.

Encryption does not have a large user visible impact, even for this

intense workload.

LOOPDD

Figure 3.3 shows the elapsed time results for the

LOOPDD configuration. The elapsed times for a single process

are 41.4 seconds for Null, 44.5 for Blowfish, 45.7 for AES, and 73.9

for 3DES. For eight processes, the elapsed times decrease to 23.1,

23.5, 24.5, and 50.39 seconds for Null, Blowfish, AES, and 3DES,

respectively.

Figure 3.3: Note: Null, Blowfish, and AES additionally have points for 1-8 in one process increments rather than exponentially increasing processes.

With LOOPDD we noticed an anomaly at four processes. We have

repeated this test over 50 times and the standard deviation remains

high at 40% of the mean for Null, AES, and Blowfish. There is also

an inversion between the elapsed time and the cipher speed. The Null

cipher is the slowest, followed by Blowfish, and then AES. 3DES is

not affected by this anomaly.

We have investigated this anomaly in several ways. We know this to be

an I/O effect, because the system time remains constant and when the

test is run with a ram disk, the anomaly disappears. We also ran the

test for the surrounding points at three, five, six, and seven

concurrent processes. For Null there were no additional anomalies,

but for Blowfish and AES the results for 4-6 process were erratic and

standard deviations ranged from 25-67%.

We have determined the anomaly's cause to be an interaction with the Linux

buffer flushing daemon, bdflush. Flushing dirty buffers in Linux is

controlled by three parameters: nfract, nfract_stop, and

nfract_sync. Each parameter is a percentage of the total number of

buffers available on the system. When a buffer is marked dirty, the

system checks if the number of dirty buffers exceeds nfract% (by

default 30%) of the total number of buffers. If so,

bdflush is woken up and begins to sync buffers until only

nfract_stop% of the system buffers are dirty (by default 20%).

After waking up bdflush, the kernel checks if more than

nfract_sync% of the total buffers are dirty (by default 60%). If

so, then the process synchronously flushes NRSYNC buffers

(hard coded to 32) before returning control to the process. The

nfract_sync is designed to throttle heavy writers and ensure that

enough clean buffers are available.

We changed the nfract_sync parameter to 90% and reran this test.

Figure 3.4 shows the results when using

nfract_sync=90% and the anomaly is gone.

Since the Null processes are writing to the disk so quickly, they

end up causing the number of dirty buffers to go over the

nfract_sync threshold. The four process test is very close to this

threshold, which accounts for the high standard deviation.

When more CPU is used, either through more processes or slower

ciphers, the rate of writes is slowed, and this this effect is not

encountered.

We have confirmed our analysis by increasing the RAM on the machine

from 512MB to 1024MB, and again the elapsed time anomaly disappeared.

Figure 3.3: Note: Null, Blowfish, and AES additionally have points for 1-8 in one process increments rather than exponentially increasing processes.

With LOOPDD we noticed an anomaly at four processes. We have

repeated this test over 50 times and the standard deviation remains

high at 40% of the mean for Null, AES, and Blowfish. There is also

an inversion between the elapsed time and the cipher speed. The Null

cipher is the slowest, followed by Blowfish, and then AES. 3DES is

not affected by this anomaly.

We have investigated this anomaly in several ways. We know this to be

an I/O effect, because the system time remains constant and when the

test is run with a ram disk, the anomaly disappears. We also ran the

test for the surrounding points at three, five, six, and seven

concurrent processes. For Null there were no additional anomalies,

but for Blowfish and AES the results for 4-6 process were erratic and

standard deviations ranged from 25-67%.

We have determined the anomaly's cause to be an interaction with the Linux

buffer flushing daemon, bdflush. Flushing dirty buffers in Linux is

controlled by three parameters: nfract, nfract_stop, and

nfract_sync. Each parameter is a percentage of the total number of

buffers available on the system. When a buffer is marked dirty, the

system checks if the number of dirty buffers exceeds nfract% (by

default 30%) of the total number of buffers. If so,

bdflush is woken up and begins to sync buffers until only

nfract_stop% of the system buffers are dirty (by default 20%).

After waking up bdflush, the kernel checks if more than

nfract_sync% of the total buffers are dirty (by default 60%). If

so, then the process synchronously flushes NRSYNC buffers

(hard coded to 32) before returning control to the process. The

nfract_sync is designed to throttle heavy writers and ensure that

enough clean buffers are available.

We changed the nfract_sync parameter to 90% and reran this test.

Figure 3.4 shows the results when using

nfract_sync=90% and the anomaly is gone.

Since the Null processes are writing to the disk so quickly, they

end up causing the number of dirty buffers to go over the

nfract_sync threshold. The four process test is very close to this

threshold, which accounts for the high standard deviation.

When more CPU is used, either through more processes or slower

ciphers, the rate of writes is slowed, and this this effect is not

encountered.

We have confirmed our analysis by increasing the RAM on the machine

from 512MB to 1024MB, and again the elapsed time anomaly disappeared.

Figure 3.4: Note: 3DES is not included because it does not display this anomaly.

The other major difference between LOOPDD and

LOOPDEV is that LOOPDD uses more system time. This

is for two reasons. First, LOOPDD traverses the file system

code twice, once for the test file system and once for the file system

containing the backing store. Second, LOOPDD effectively

cuts the buffer and page caches in half by double buffering. Cutting

the buffer cache in half means that fewer cached cleartext pages are

available so more encryption operations must take place.

The system time used for LOOPDD is also relatively constant,

regardless of how many processes are used.

We instrumented a CryptoAPI cipher to count the number of bytes

encrypted, and determined that unlike LOOPDEV, the number of

encryptions and decryptions does not significantly change. The

LOOPDEV system marks the buffers dirty and issues an I/O

request to write that buffer. If another write comes through before

the I/O is completed, then the writes are coalesced into a single I/O

operation. When LOOPDD writes a buffer it adds the buffer to

the end of a queue for the loop thread to write. If the same buffer

is written twice, the buffer is added to the queue twice, and hence

encrypted twice. This prevents searching through the queue, and since

the lower-level file system may in fact coalesce the writes, this does

not have a large impact on elapsed time.

The results show that LOOPDD systems have several complex

interactions with many components of the system that are difficult to

explain or predict. When maximal performance and predictability is a

consideration, LOOPDEV should be used instead of

LOOPDD.

CFS

Figure 3.5 shows CFS elapsed times, which are

relatively constant no matter how many processes are running. Since

CFS is a single threaded NFS server, this result was expected.

System time also remained constant for each test: 146.7-165.3 seconds

for Null, 298.9-309.4 seconds for Blowfish, and 505.7-527.8 seconds for

3DES.

There is a decrease in elapsed time when there are eight concurrent

processes (27.0% for Null, 14.1% for Blowfish, and 7.8% for 3DES),

but the results return to their normal progression for 16 processes.

The dip at eight processes occurs because I/O time decreases. At this

point the request stream to our particular disk optimally interleaves

with CPU usage. System time remains the same. When this test is run

inside of a ram disk or on different hardware, this anomaly

disappears.

Figure 3.4: Note: 3DES is not included because it does not display this anomaly.

The other major difference between LOOPDD and

LOOPDEV is that LOOPDD uses more system time. This

is for two reasons. First, LOOPDD traverses the file system

code twice, once for the test file system and once for the file system

containing the backing store. Second, LOOPDD effectively

cuts the buffer and page caches in half by double buffering. Cutting

the buffer cache in half means that fewer cached cleartext pages are

available so more encryption operations must take place.

The system time used for LOOPDD is also relatively constant,

regardless of how many processes are used.

We instrumented a CryptoAPI cipher to count the number of bytes

encrypted, and determined that unlike LOOPDEV, the number of

encryptions and decryptions does not significantly change. The

LOOPDEV system marks the buffers dirty and issues an I/O

request to write that buffer. If another write comes through before

the I/O is completed, then the writes are coalesced into a single I/O

operation. When LOOPDD writes a buffer it adds the buffer to

the end of a queue for the loop thread to write. If the same buffer

is written twice, the buffer is added to the queue twice, and hence

encrypted twice. This prevents searching through the queue, and since

the lower-level file system may in fact coalesce the writes, this does

not have a large impact on elapsed time.

The results show that LOOPDD systems have several complex

interactions with many components of the system that are difficult to

explain or predict. When maximal performance and predictability is a

consideration, LOOPDEV should be used instead of

LOOPDD.

CFS

Figure 3.5 shows CFS elapsed times, which are

relatively constant no matter how many processes are running. Since

CFS is a single threaded NFS server, this result was expected.

System time also remained constant for each test: 146.7-165.3 seconds

for Null, 298.9-309.4 seconds for Blowfish, and 505.7-527.8 seconds for

3DES.

There is a decrease in elapsed time when there are eight concurrent

processes (27.0% for Null, 14.1% for Blowfish, and 7.8% for 3DES),

but the results return to their normal progression for 16 processes.

The dip at eight processes occurs because I/O time decreases. At this

point the request stream to our particular disk optimally interleaves

with CPU usage. System time remains the same. When this test is run

inside of a ram disk or on different hardware, this anomaly

disappears.

Figure 3.5: PostMark: CFS elapsed time for the workstation

We conclude that both the user-space and single-threaded architecture

are bottlenecks for CFS. The single threaded architecture prevents

CFS from making use of parallelism, while the user-space architecture

causes CFS to consume more system time for data copies to and from

user space and through the network stack.

[Elapsed time]

Figure 3.5: PostMark: CFS elapsed time for the workstation

We conclude that both the user-space and single-threaded architecture

are bottlenecks for CFS. The single threaded architecture prevents

CFS from making use of parallelism, while the user-space architecture

causes CFS to consume more system time for data copies to and from

user space and through the network stack.

[Elapsed time]

[System time]

[System time]

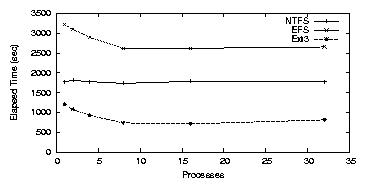

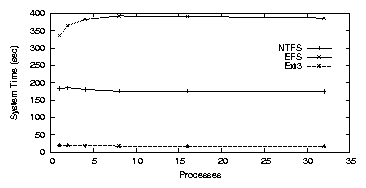

Figure 3.6: PostMark: NTFS on the workstation.

NTFS

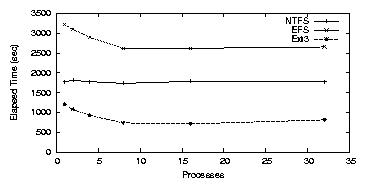

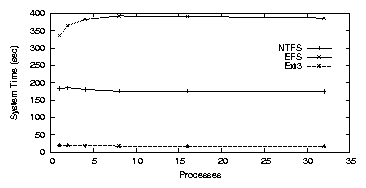

Figure 3.6(a) shows that NTFS performs significantly

worse than Ext2/3 for the PostMark benchmark. The elapsed time for NTFS

was relatively constant regardless of the number of processes, ranging

from 28.9-30.2 minutes. Katcher reported that NTFS performs poorly for

this workload when there are many concurrent files

[29]. Unlike Ext2, NTFS is a journaling file

system. To compare NTFS with a similar file system we ran this

benchmark for Ext3 with journaling enabled for both meta-data and

data. Ext2 took 2.1 minutes for one process, and Ext3 took 20.0

minutes. We hypothesize that the journaling behavior of NTFS

negatively impacts its performance for PostMark operations.

NTFS has many more synchronous writes than Ext3, and all writes have

three phases that must be synchronous. First, changes to the

meta-data are written to allow a read to check for corruption or

crashes. Second, the actual data is written. Third, the metadata is

fixed, again with a synchronous write. Between the first and last

step, the metadata may not be read or written to, because it is in

effect invalid.

Furthermore, the on-disk layout of NTFS is more prone to seeking than

that of Ext2 (Ext3 has an identical layout). NTFS stores most

metadata information in the master file table (MFT), which is

placed in the first 12% of the partition. Ext2 has group

descriptors, block bitmaps, inode bitmaps, and inode tables for each

cylinder group. This means that a file's meta-data is physically

closer to its data in Ext2 than in NTFS.

When an encrypted folder is used for a single process, elapsed time

increases to 57.2 minutes, a 98% overhead over NTFS. The right of

Figure 3.6(b) shows that for a single process, system

time increases to 335.2 seconds, a 82.9% overhead over NTFS. We

have observed that unlike NTFS, EFS is able to benefit from

parallelism when multiple processes are used under this load.

When there are eight concurrent processes, the elapsed time

decreases to 43.5 minutes, and the system time increases to

386.5 seconds. No additional throughput is achieved after eight

processes. We conclude that NTFS, and hence EFS, is not suitable

for busy workloads with a large number of concurrent files.

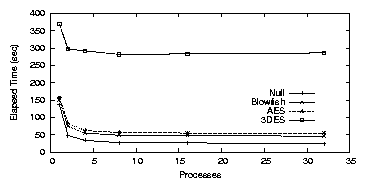

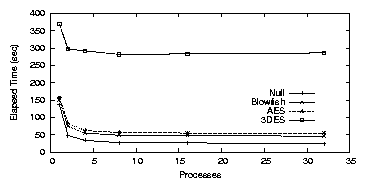

NCryptfs

Figure 3.7 shows that for a single process running

under NCryptfs, the elapsed times were 136.2, 150.0, 156.5, and 368.9

seconds for Null, Blowfish, AES, and 3DES, respectively. System times

were 22.2, 44.0, 54.3, and 287.5 seconds for Null, Blowfish, AES, and

3DES, respectively.

This is higher than for LOOPDD and LOOPDEV because

NCryptfs uses write-through, so all writes cause an encryption

operation to take place. The system time is lower than in CFS because

CFS must copy data to and from user-space, and through the

network stack.

Average CPU utilization was 36.4% for Null, 47.5% for Blowfish,

52.2% for AES, and 84.9% for 3DES. For eight processes, elapsed

time was 27.0, 48.2, 56.2, and 281.5 seconds for Null, Blowfish, AES,

and 3DES, respectively. CPU utilization was 90.2%, 96.3%, 98.0%,

and 99.3% for Null, Blowfish, AES, and 3DES, respectively. This high

average CPU utilization indicates that CPU is the bottleneck,

preventing greater throughput from being achieved.

Figure 3.6: PostMark: NTFS on the workstation.

NTFS

Figure 3.6(a) shows that NTFS performs significantly

worse than Ext2/3 for the PostMark benchmark. The elapsed time for NTFS

was relatively constant regardless of the number of processes, ranging

from 28.9-30.2 minutes. Katcher reported that NTFS performs poorly for

this workload when there are many concurrent files

[29]. Unlike Ext2, NTFS is a journaling file

system. To compare NTFS with a similar file system we ran this

benchmark for Ext3 with journaling enabled for both meta-data and

data. Ext2 took 2.1 minutes for one process, and Ext3 took 20.0

minutes. We hypothesize that the journaling behavior of NTFS

negatively impacts its performance for PostMark operations.

NTFS has many more synchronous writes than Ext3, and all writes have

three phases that must be synchronous. First, changes to the

meta-data are written to allow a read to check for corruption or

crashes. Second, the actual data is written. Third, the metadata is

fixed, again with a synchronous write. Between the first and last

step, the metadata may not be read or written to, because it is in

effect invalid.

Furthermore, the on-disk layout of NTFS is more prone to seeking than

that of Ext2 (Ext3 has an identical layout). NTFS stores most

metadata information in the master file table (MFT), which is

placed in the first 12% of the partition. Ext2 has group

descriptors, block bitmaps, inode bitmaps, and inode tables for each

cylinder group. This means that a file's meta-data is physically

closer to its data in Ext2 than in NTFS.

When an encrypted folder is used for a single process, elapsed time

increases to 57.2 minutes, a 98% overhead over NTFS. The right of

Figure 3.6(b) shows that for a single process, system

time increases to 335.2 seconds, a 82.9% overhead over NTFS. We

have observed that unlike NTFS, EFS is able to benefit from

parallelism when multiple processes are used under this load.

When there are eight concurrent processes, the elapsed time

decreases to 43.5 minutes, and the system time increases to

386.5 seconds. No additional throughput is achieved after eight

processes. We conclude that NTFS, and hence EFS, is not suitable

for busy workloads with a large number of concurrent files.

NCryptfs

Figure 3.7 shows that for a single process running

under NCryptfs, the elapsed times were 136.2, 150.0, 156.5, and 368.9

seconds for Null, Blowfish, AES, and 3DES, respectively. System times

were 22.2, 44.0, 54.3, and 287.5 seconds for Null, Blowfish, AES, and

3DES, respectively.

This is higher than for LOOPDD and LOOPDEV because

NCryptfs uses write-through, so all writes cause an encryption

operation to take place. The system time is lower than in CFS because

CFS must copy data to and from user-space, and through the

network stack.

Average CPU utilization was 36.4% for Null, 47.5% for Blowfish,

52.2% for AES, and 84.9% for 3DES. For eight processes, elapsed

time was 27.0, 48.2, 56.2, and 281.5 seconds for Null, Blowfish, AES,

and 3DES, respectively. CPU utilization was 90.2%, 96.3%, 98.0%,

and 99.3% for Null, Blowfish, AES, and 3DES, respectively. This high

average CPU utilization indicates that CPU is the bottleneck,

preventing greater throughput from being achieved.

Figure 3.7: PostMark: NCryptfs Workstation elapsed time

Ciphers

As expected throughout these tests, 3DES introduces significantly more

overhead than AES or Blowfish. Blowfish was designed with speed in

mind, and a requirement for AES was that it would be faster than 3DES.

In this test AES and Blowfish perform similarly.

We chose to use Blowfish for further benchmarks because it is often

used for file system cryptography and has properties that are useful

for cryptographic file systems.

First, Blowfish supports long key lengths, up to 448-bits. This is

useful for long-term storage because it is necessary to protect

against future attacks.

Second, Blowfish generates tables to speed the actual encryption

operation. Key setup is an expensive operation, which takes 4168

bytes of memory and requires 521 iterations of the Blowfish algorithm

[57]. Since key setup is done infrequently,

this does not place undue burden on the user, but makes it somewhat

more difficult to mount a brute-force attack.

Figure 3.7: PostMark: NCryptfs Workstation elapsed time

Ciphers

As expected throughout these tests, 3DES introduces significantly more

overhead than AES or Blowfish. Blowfish was designed with speed in

mind, and a requirement for AES was that it would be faster than 3DES.

In this test AES and Blowfish perform similarly.

We chose to use Blowfish for further benchmarks because it is often

used for file system cryptography and has properties that are useful

for cryptographic file systems.

First, Blowfish supports long key lengths, up to 448-bits. This is

useful for long-term storage because it is necessary to protect

against future attacks.

Second, Blowfish generates tables to speed the actual encryption

operation. Key setup is an expensive operation, which takes 4168

bytes of memory and requires 521 iterations of the Blowfish algorithm

[57]. Since key setup is done infrequently,

this does not place undue burden on the user, but makes it somewhat

more difficult to mount a brute-force attack.

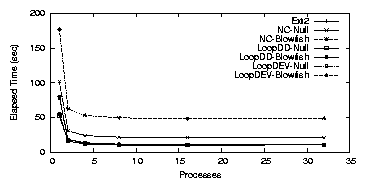

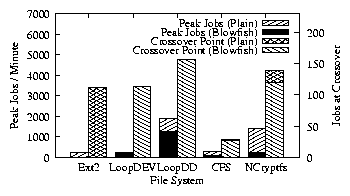

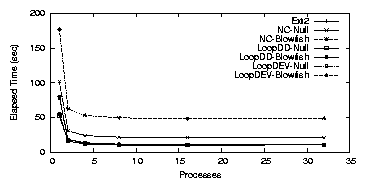

3.2.2 File Server Results

Single CPU Itanium

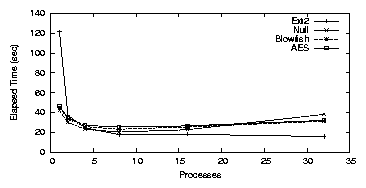

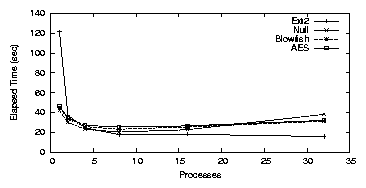

The left of Figure 3.8(a) shows the results when we

ran the PostMark benchmark on our Itanium file server configuration

with one CPU enabled (we used the same SMP kernel as we did with two

CPUS). The trend is similar to that seen for the workstation.